When the artificial intelligence (AI) language model ChatGPT first became available to the public, schools scrambled to rearrange curriculums, develop new policies and cushion against what seemed to be the biggest threat to educational integrity yet. The program, developed by OpenAI, was among the first widely available language models that could respond to prompts and replicate the mannerisms and styles of humans. Students could request multi-page college essays, solve difficult science, technology, engineering and mathematics (STEM) problems and produce near-flawless translations of both ancient Latin and state-of-the-art computer code, all on demand.

At least that’s what they thought.

As much as generative AI has received credit for its human-like capabilities, programs like ChatGPT or DALL-E still fall short of creating genuine, authentic pieces of work. In high-level STEM or foreign language classes, teachers have recognized common errors in the generated responses, and in more creative or humanities-focused subjects, teachers have learned to detect robotic writing styles or questionable redundancy. A study by Stanford and UC Berkeley researchers found that the quality of ChatGPT’s responses on some tasks declined over time. The program also isn’t very customizable, which leads to rigid and stale answers.

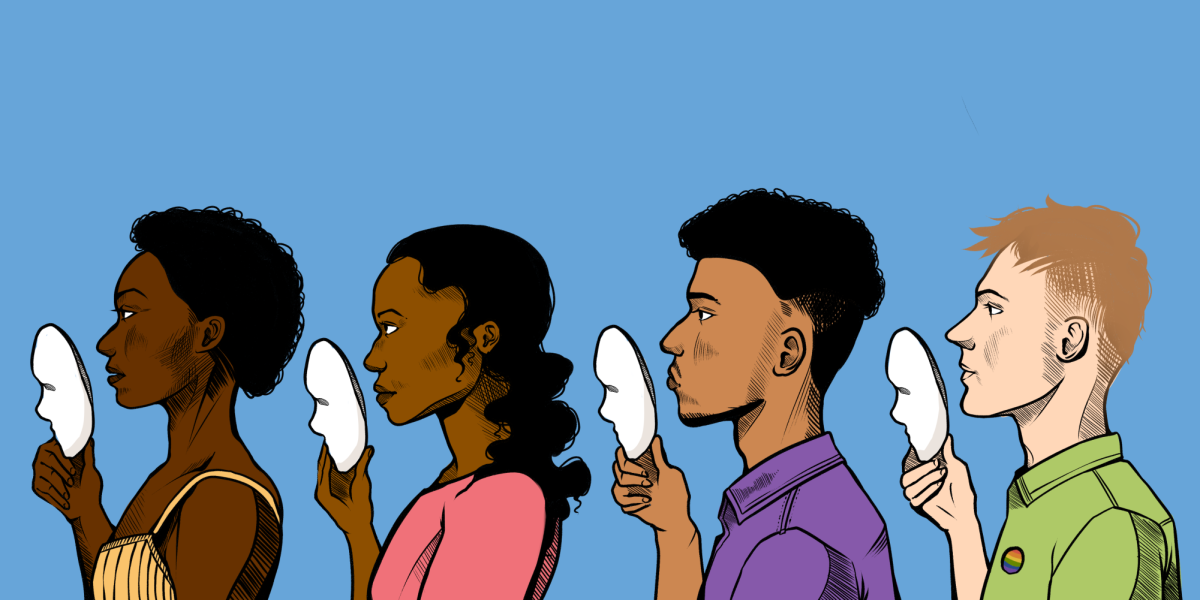

If generative AI’s shortcomings are becoming more recognizable, schools shouldn’t shy away and ban its use altogether; they should embrace it and educate students about it instead. They should recognize AI’s imperfections and inform students about them, using that as a launching point for discouraging its direct application to academic work. Assignments like semester capstone papers or take-home essays can be important ways to assess a student’s progress, and removing them hastily may be too much of a tradeoff. While AI would be able to complete some assignments, an approach centered on academic honesty and education about ChatGPT would better encourage responsible AI use.

A simple ban does little compared to a nuanced generative AI education. Enforcement is unreliable to begin with: a study by Cornell University shows that generative-AI detectors are inaccurate in most practical scenarios. And while ChatGPT is blocked on the school’s network and in multiple academic departments, the block only pushes students to find similar generative AI tools developed by other technology giants: Google’s Bard, Amazon’s CodeWhisperer and the new Microsoft Bing all offer comparable capabilities. Constantly chasing each new alternative would be a dead end for schools. Furthermore, students will inevitably use ChatGPT outside of the school’s network for homework assignments or as a general study tool. A ban would do little against that.

Schools need to focus more on the upsides of ChatGPT as well. Generative AI could be a way to plan more effectively, brainstorm ideas quickly or find information on unfamiliar subjects with ease. Innovative and emerging technologies will continue to offer more possibilities, and teachers should ensure that students have the knowledge to employ those technologies responsibly. If we cannot trust our generation with what we have now, how can it be prepared for an even more dangerous future?

Schools around the country have already begun reforming policies surrounding generative AI technology. In May, the New York City Department of Education rescinded its ban on ChatGPT, arguing that the potential benefits of working with emerging technology would outweigh the immediate risk of misuse. Other schools, including ours, should follow suit, remembering that AI shouldn’t be a threat to our humanity but rather a tool to enhance our critical thinking skills.